- How to install Apache Spark for Scala 2.11.8


With the increasing adoption of Apache Spark in the industry as big data grows, here are five big data trends that deserve attention.

I) The shift from storage to computational power: Apache Spark is at the center of the smart-computation evolution because of its large-scale, in-memory data processing.

This partnership will give data scientists the ability to auto-scale cloud-based clusters to handle jobs while keeping the overall total cost of ownership (TCO) low.


This collaboration will let the two companies integrate the Databricks Unified Analytics Platform with RStudio Server to simplify R programming on big data for data scientists. It will remove the major roadblocks that have brought several R-based AI and machine learning projects to a halt. The collaboration will help data science teams in the following ways:

- Data scientists will be able to use familiar tools and languages to execute R jobs, resulting in enhanced productivity among data science teams.
- It will provide simplified access to large datasets, as all datasets will be accessible in the Unified Analytics Platform and data scientists can work on the code in RStudio.

Apache Spark uses Resilient Distributed Datasets (RDDs). RDDs are immutable and are the preferred option for pipelining parallel computational operators. Apache Spark is fault tolerant and executes Hadoop MapReduce jobs much faster. Apache Storm, on the other hand, focuses on stream processing and complex event processing. Storm is generally used to transform unstructured data into a desired format as it is processed into a system.

Spark and Storm have different applications, but a fair comparison can be made between Storm and Spark Streaming. In Spark Streaming, incoming updates are batched and each batch is transformed into its own RDD. Individual computations are then performed on these RDDs by Spark's parallel operators. In one sentence, Storm performs task-parallel computations and Spark performs data-parallel computations.
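The micro-batching idea can be illustrated with a small toy sketch in plain Python. It does not use Spark at all: `micro_batches` and `process_batch` are hypothetical helper names, and batches are cut by element count, whereas Spark Streaming cuts them by time interval. Tuples stand in for immutable RDDs, and one operator is applied to every element of a batch, which is the data-parallel pattern in miniature.

```python
from itertools import islice


def micro_batches(events, batch_size):
    """Yield successive immutable batches (tuples) from an iterable of events.

    Simplification: batches are cut by count, not by a time interval.
    """
    iterator = iter(events)
    while True:
        batch = tuple(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def process_batch(batch, operator):
    """Apply one operator to every element of a batch and return a new tuple.

    The input batch is never modified, mirroring the immutability of RDDs.
    """
    return tuple(operator(item) for item in batch)


if __name__ == "__main__":
    incoming = [1, 2, 3, 4, 5]
    for batch in micro_batches(incoming, batch_size=2):
        print(process_batch(batch, lambda x: x * 10))
```

In a real Spark job the per-element work would be distributed across the cluster; here it simply runs in a loop on one machine.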


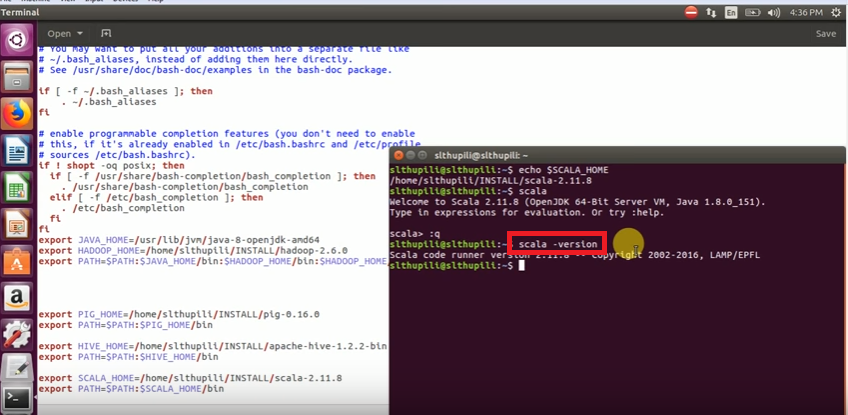

This short tutorial will help you set up Apache Spark on Windows 7 in standalone mode. The prerequisites for setting up Apache Spark are listed below:

- Install Java 6 or a later version (if you haven't already).
- Set PATH and JAVA_HOME as environment variables.
- Download Scala 2.10.x (or 2.11) and install it.
- Set SCALA_HOME and add %SCALA_HOME%\bin to the PATH environment variable.
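Before moving on, it can help to confirm that the environment variables are actually in place. The following is a minimal sketch, not part of Spark or Scala: `check_prerequisites` is a hypothetical helper that only checks that JAVA_HOME and SCALA_HOME are set and that the Scala `bin` directory appears on PATH. It assumes Windows-style paths separated by `;` and does not verify the installed Java or Scala versions.

```python
import ntpath
import os


def _norm(path):
    """Normalize a Windows path for comparison (case, slashes, trailing separators)."""
    return ntpath.normcase(ntpath.normpath(path))


def check_prerequisites(env=None):
    """Return a list of problems found; an empty list means every check passed.

    `env` is any mapping of variable names to values and defaults to os.environ.
    """
    env = os.environ if env is None else env
    problems = []

    # Both variables from the prerequisites list must be set and non-empty.
    for name in ("JAVA_HOME", "SCALA_HOME"):
        if not env.get(name):
            problems.append(f"{name} is not set")

    # %SCALA_HOME%\bin must be one of the PATH entries (Windows uses ';').
    scala_home = env.get("SCALA_HOME")
    if scala_home:
        wanted = _norm(ntpath.join(scala_home, "bin"))
        entries = [_norm(p) for p in env.get("PATH", "").split(";") if p]
        if wanted not in entries:
            problems.append("%SCALA_HOME%\\bin is not on PATH")

    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print(problem)
```

Running the script in a fresh command prompt after changing the variables prints one line per missing prerequisite and prints nothing when all checks pass.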
